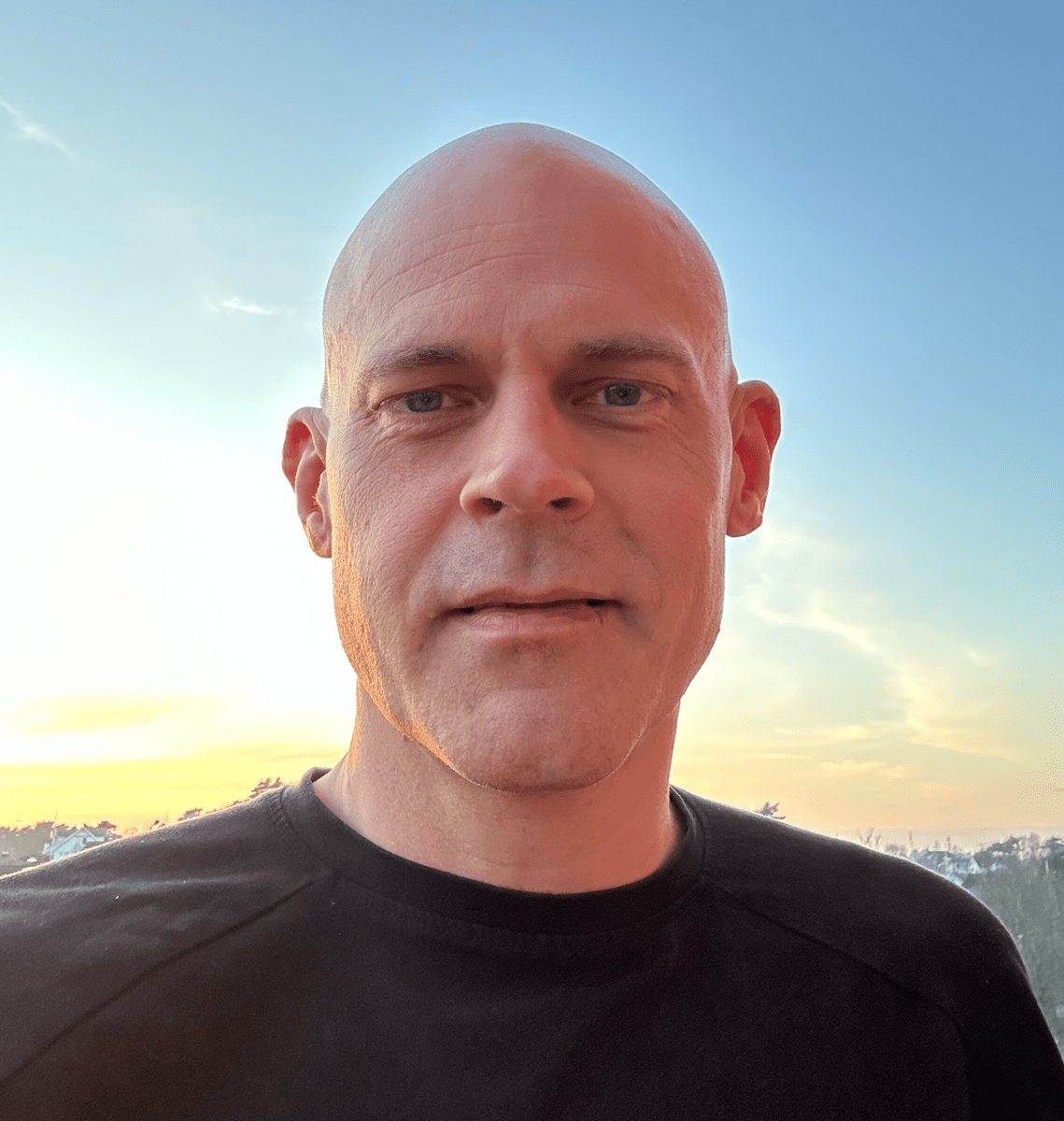

A brief chat with Christoffer Petersson

Deep Learning & AI

Meet our employees from different parts of Zenseact when they share their story, insights and experiences.

Who are you? Tell us about yourself.

I’m a technical expert in deep learning at Zenseact and an adjunct associate professor at Chalmers. I work at the intersection of research and product development in AI, focusing on leveraging data and learning to enable more scalable, intelligent, and safer autonomous driving and driver-assistance systems.

Why do we use AI in our software?

We use AI to make our software more intelligent, robust, and ultimately safer. Many tasks that are critical for safe driving, such as detecting and classifying objects, are handled better by AI models than by traditional methods. These models offer the accuracy and robustness needed for our software to operate safely and in compliance with regulations in real-world environments.

Our software is partly based on AI. How do we ensure that the AI-driven functions behave correctly?

We invest significant time and effort in validating our AI models. Importantly, we don't let our software act blindly on AI outputs. AI helps us detect objects, estimate their velocities, predict how other road users will behave, and propose how our vehicle should drive. On top of that, we have an additional safety layer of guardrails that checks these proposals for consistency, safety, and compliance with traffic rules. This lets us leverage the strengths of AI without compromising safety.

What is meant by “end-to-end driving”?

End-to-end driving refers to using a single, large AI model that takes raw sensor data and directly outputs driving commands such as steering, acceleration, and braking. That is not our approach. While we do use AI to propose driving actions, we always apply safety guardrails to verify those proposals and have backup systems to ensure safety. Decisions therefore never go directly from AI to execution without oversight.

Are we using AI for all parts of the software?

No, we are not using AI for all parts of the software. AI is excellent for tasks like object detection, prediction, and driving proposals. However, real-world traffic can present unexpected and rare events that AI may not have seen in training. That's why we combine AI with traditional software engineering, safety guardrails, and fallback mechanisms to handle edge cases reliably.

What role does human oversight play in developing our software?

Human oversight is essential. Our engineers define safety boundaries, validate AI models, and take responsibility for ensuring the software is safe. We also use human feedback to improve training data. Ultimately, while AI is a powerful tool, it’s the people who ensure that our software performs safely and in compliance with regulations.

Why do we need AI if we can write code for any traffic situation?

The truth is, we can’t write code for every possible traffic situation. The real world is messy and full of unpredictable and ambiguous events. Instead of attempting the impossible task of hand-coding every scenario, AI learns from large, diverse datasets. This approach has proven highly effective and is key to handling the complexity of real-world driving.